Naive Bayes algorithm is a probabilistic classifier that operates based on Bayes’ theorem. It is Supervised Machine Learning Algorithms.

It is highly efficient in computation, making it suitable for real-time problems. The algorithm assumes that each attribute in a class is highly independent. It can handle both continuous and discrete attributes effectively.

The prediction model of Naive Bayes performs poorly when there is a strong dependency among the attributes in the dataset. For instance, consider a scenario where the eligibility of a loan applicant depends on various factors such as income, working period, purpose of the loan, previous loan history, and transaction history.

The formulation of Bayes’ theorem is as follows:

P(h/D) = (P(D/h)P(h)) /P(D)

Where:

P(h): the probability of hypothesis h being true. This is known as the prior prob-

ability of h.

P(D): the probability of the data. This is known as the prior probability.

P(h|D): the probability of hypothesis h given the data D. This is known as poste-

rior probability.

P(D|h): the probability of data d given that the hypothesis h was true. This is

Naive Bayes classifier using Python’s scikit-learn

Naive Bayes classifier using Python’s scikit-learn

Sample code to build a Naive Bayes classifier using Python’s scikit-learn library and calculate its accuracy.

# Import necessary libraries

from sklearn.model_selection import train_test_split

from sklearn.datasets import load_iris

from sklearn.naive_bayes import GaussianNB

from sklearn import metrics

# Load dataset (example using Iris dataset)

iris = load_iris()

X = iris.data

y = iris.target

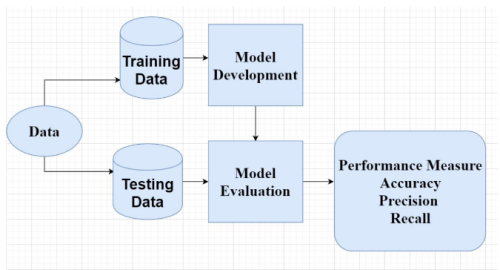

# Split dataset into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# Initialize Naive Bayes classifier

clf = GaussianNB()

# Train the classifier

clf.fit(X_train, y_train)

# Predict on the test data

y_pred = clf.predict(X_test)

# Calculate accuracy

accuracy = metrics.accuracy_score(y_test, y_pred)

print("Accuracy:", accuracy)

In this code:

- We first import the necessary libraries including scikit-learn for machine learning functionalities.

- We load a sample dataset (here, Iris dataset) and split it into training and testing sets.

- We initialize a Gaussian Naive Bayes classifier (assuming continuous features).

- We train the classifier using the training data.

- We use the trained classifier to predict on the test data.

- Finally, we calculate the accuracy of the model by comparing the predicted labels with the actual labels of the test data.

You can replace the Iris dataset with your own dataset according to your problem statement. Make sure to preprocess your data accordingly if needed (e.g., handling missing values, encoding categorical variables).

Advantages

- Simple, fast, and accurate prediction method.

- Very low computational cost.

- Efficient with large datasets.

- Performs well with discrete response variables.

- Applicable to multiple class prediction problems.

- Effective in text analytics tasks.

Disadvantages

- Assumes features are independent, which is often not the case.

- Zero posterior probability for classes without training data can lead to prediction issues, known as the Zero Probability/Frequency Problem.

Conclusion

Naive Bayes is a really simple but powerful algorithm. Even though machine learning has advanced a lot recently, Naive Bayes still works really well. It’s been used in lots of different things like understanding text and making recommendations.

Read also: Introduction To Decision Tree: A Supervised Machine Learning Algorithm