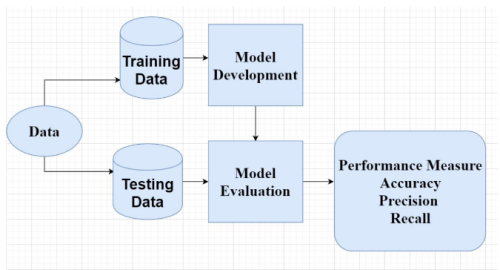

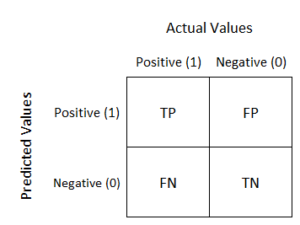

A confusion matrix is a table used in machine learning to evaluate classification models. It compares the predicted labels with the actual labels of a test set and counts the true positives, false positives, true negatives, and false negatives for each class.

It can also be used to calculate various performance metrics such as precision, recall, and F1 score.

True Positives (TP):

These are the cases where the actual value in the dataset is positive (i.e., belongs to the positive class) and the model's predicted value is also positive.

False Positives (FP):

These are the cases where the actual value in the dataset is negative (i.e., belongs to the negative class) but the model's predicted value is positive.

True Negatives (TN):

These are the cases where the actual value in the dataset is negative (i.e., belongs to the negative class) and the model's predicted value is also negative.

False Negatives (FN):

These are the cases where the actual value in the dataset is positive (i.e., belongs to the positive class) but the model's predicted value is negative.
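To make the four definitions concrete, here is a minimal Python sketch that counts them from two lists of binary labels. The function name `confusion_counts`, the `positive` parameter, and the sample labels are illustrative assumptions, not part of any particular library.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, TN, FN for a binary problem (hypothetical helper)."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # predicted positive, actually positive
            else:
                fp += 1  # predicted positive, actually negative
        else:
            if actual == positive:
                fn += 1  # predicted negative, actually positive
            else:
                tn += 1  # predicted negative, actually negative
    return tp, fp, tn, fn


# Made-up labels for illustration only: 1 = positive class, 0 = negative class.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]

tp, fp, tn, fn = confusion_counts(y_true, y_pred)
print(tp, fp, tn, fn)  # 3 1 3 1
```

In practice a library routine would usually do this counting; the explicit loop is only meant to show which case lands in which cell.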

The accuracy of the model can be calculated using the values from the confusion matrix. The accuracy is defined as the ratio of the number of correct predictions to the total number of predictions.

The formula to calculate accuracy is:

Accuracy = (TP + TN) / (TP + TN + FP + FN)
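As a quick check of the formula, the sketch below computes accuracy from the four counts. The function name `accuracy` and the example counts are assumptions chosen for illustration.

```python
def accuracy(tp, tn, fp, fn):
    """Accuracy = (TP + TN) / (TP + TN + FP + FN)."""
    total = tp + tn + fp + fn
    if total == 0:
        # Accuracy is undefined when there are no predictions at all.
        raise ValueError("accuracy is undefined for zero predictions")
    return (tp + tn) / total


# Example counts (made up): 3 TP, 3 TN, 1 FP, 1 FN -> 6 correct out of 8.
acc = accuracy(tp=3, tn=3, fp=1, fn=1)
print(acc)  # 0.75
```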

Using the values from the confusion matrix, we can calculate several performance metrics, such as:

Precision: the fraction of true positives among all positive predictions made by the model.

Recall: the fraction of true positives among all actual positive cases in the dataset.

F1 score: the harmonic mean of precision and recall, which gives a balanced measure of the model’s performance.

To calculate these metrics, we can use the following formulas:

Precision = TP / (TP + FP)

Recall = TP / (TP + FN)

F1 score = 2 * (Precision * Recall) / (Precision + Recall)
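The three formulas can be sketched in Python as follows. The function names and example counts are hypothetical, and returning 0.0 when a denominator is zero is one possible convention assumed here, not something the formulas themselves prescribe.

```python
def precision(tp, fp):
    """Precision = TP / (TP + FP); returns 0.0 if nothing was predicted positive (assumed convention)."""
    denom = tp + fp
    return tp / denom if denom else 0.0


def recall(tp, fn):
    """Recall = TP / (TP + FN); returns 0.0 if there are no actual positives (assumed convention)."""
    denom = tp + fn
    return tp / denom if denom else 0.0


def f1_score(p, r):
    """F1 = 2 * (precision * recall) / (precision + recall)."""
    denom = p + r
    return 2 * (p * r) / denom if denom else 0.0


# Example counts (made up): 8 TP, 2 FP, 8 FN.
p = precision(tp=8, fp=2)  # 8 / 10 = 0.8
r = recall(tp=8, fn=8)     # 8 / 16 = 0.5
f1 = f1_score(p, r)        # 2 * 0.4 / 1.3, roughly 0.615

print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```

Because F1 is a harmonic mean, it sits closer to the lower of the two values (here recall), which is why a model cannot score a high F1 by doing well on only one of them.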

In summary, the confusion matrix is a valuable tool for evaluating the performance of a classification model. By understanding its elements and the performance metrics that can be calculated from it, we can better understand the strengths and weaknesses of our model and optimize its performance accordingly.