The term “Artificial Neural Network” is derived from biological neural networks, which form the structure of the human brain. Just as the human brain has neurons interconnected with one another, an artificial neural network has neurons that are interconnected with one another across the layers of the network.

The basic unit of computation in a neural network is the neuron, often called a node or unit. It receives input from other nodes or from an external source and computes an output.
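This computation can be written compactly. The notation here is chosen for illustration: for inputs $x_1, \dots, x_n$ with weights $w_1, \dots, w_n$, a bias $b$, and an activation function $f$, the neuron's output $y$ is

$$
y = f\left(\sum_{i=1}^{n} w_i x_i + b\right)
$$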

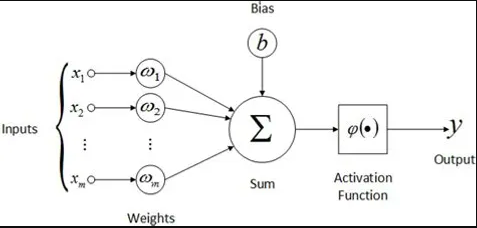

- Inputs: Inputs are the set of values from which the network predicts the output value. They can be viewed as the features or attributes of a dataset.

- Weights: Weights are real values associated with each feature, and each weight indicates how important that feature is in predicting the final value. Weights change the orientation, or slope, of the line that separates two or more classes of data points. They express the strength and direction of the relationship between a feature and the target value.

- Bias: The bias shifts the activation function to the left or right. It plays the same role as the y-intercept c in the line equation y = mx + c.

- Summation Function: The summation function combines the inputs and weights by multiplying each input by its corresponding weight and adding the products together, along with the bias.

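- Activation Function: The activation function is applied to the result of the summation function to produce the neuron's output. It introduces non-linearity into the model, which lets the network learn relationships that a purely linear combination of the inputs cannot represent.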
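As a minimal sketch, the components listed above can be combined into a single neuron in Python. The function names (`weighted_sum`, `sigmoid`, `neuron`) are hypothetical, and the sigmoid is only an assumed example of an activation function; the text does not prescribe a particular one.

```python
import math

# Hypothetical helper names chosen for illustration; not from a specific library.

def weighted_sum(inputs, weights, bias):
    """Summation function: multiply each input by its weight, add the products, then add the bias."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def sigmoid(z):
    """Assumed activation function: maps any real z into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation=sigmoid):
    """Output of a single neuron: the activation applied to the weighted sum."""
    return activation(weighted_sum(inputs, weights, bias))


inputs = [2.0, 3.0]    # feature values
weights = [0.5, -1.0]  # one weight per feature
bias = 1.0

z = weighted_sum(inputs, weights, bias)  # 2*0.5 + 3*(-1.0) + 1.0 = -1.0
y = neuron(inputs, weights, bias)        # sigmoid(-1.0), roughly 0.269
print(z, y)
```

Because the sigmoid returns 0.5 when its argument is 0, changing `bias` moves the input value at which the output crosses 0.5. This is the left/right shift described under Bias.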