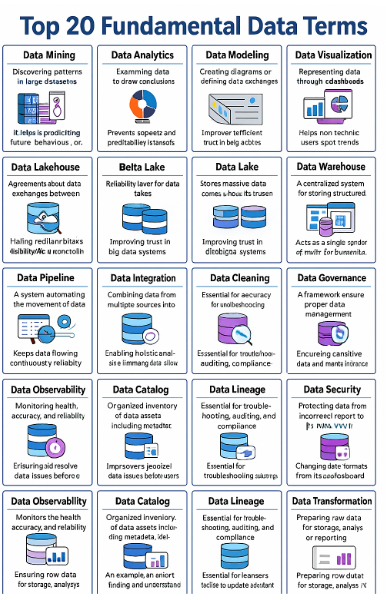

Data is often called the new oil, but extracting value from it requires understanding the foundational terms that describe how data is collected, processed, and used. This article covers 20 fundamental data terms, along with the tools commonly used for each, why each one matters, and a real-world example.

1. Data Mining

Definition:

The process of automatically discovering patterns, correlations, and trends from large datasets.

Why It Matters:

It helps businesses predict future behaviors, detect fraud, or discover new market segments.

Common Tools:

- RapidMiner

- Python (Scikit-learn)

- Weka

- R (rpart, caret)

Example:

A bank uses data mining to detect suspicious transactions that might indicate fraud.
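
The fraud example above can be illustrated with a simple outlier rule. This is only a minimal sketch, not how a production fraud model works: the transaction amounts, the `flag_outliers` name, and the 3.5 threshold are all assumptions made for illustration. It uses the modified z-score, which is based on the median and is therefore not skewed by the very outliers it is looking for.

```python
from statistics import median

def flag_outliers(amounts, threshold=3.5):
    """Return amounts whose modified z-score exceeds the threshold."""
    med = median(amounts)
    # Median absolute deviation: a measure of spread that outliers barely affect.
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        return []  # no spread around the median, so nothing stands out
    return [a for a in amounts if 0.6745 * abs(a - med) / mad > threshold]

# Hypothetical card transactions in dollars.
transactions = [42.0, 38.5, 51.0, 47.25, 40.0, 44.9, 2950.0]
suspicious = flag_outliers(transactions)
print(suspicious)
```

Tools such as Scikit-learn offer far richer techniques; the point here is only that "discovering patterns" often starts with asking which records do not look like the rest.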

2. Data Analytics

Definition:

The practice of examining data to draw conclusions, identify trends, and support decision-making.

Why It Matters:

Transforms raw data into actionable business insights.

Common Tools:

- Microsoft Power BI

- Tableau

- Excel

- Python (Pandas, Matplotlib)

Example:

Retailers analyze sales data to decide which products to promote next season.
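
As a rough sketch of the retail example, the snippet below totals revenue per product and ranks the results. The sales figures and the `revenue_by_product` helper are hypothetical; a real analysis would more likely use Pandas or a BI tool, but the underlying aggregation is the same.

```python
from collections import defaultdict

# Hypothetical sales records: (product, units sold, unit price).
sales = [
    ("scarf", 120, 15.0),
    ("gloves", 80, 12.5),
    ("scarf", 60, 15.0),
    ("umbrella", 30, 20.0),
]

def revenue_by_product(records):
    """Sum revenue per product and return (product, revenue) pairs, highest first."""
    totals = defaultdict(float)
    for product, units, price in records:
        totals[product] += units * price
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)

ranking = revenue_by_product(sales)
top_product = ranking[0][0]  # candidate for next season's promotion
print(ranking)
```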

3. Data Modeling

Definition:

Creating diagrams or schemas that define how data is organized and related.

Why It Matters:

Helps design efficient databases and ensures data consistency and integrity.

Common Tools:

- dbt (Data Build Tool)

- ER/Studio

- Lucidchart

- SQL Server Management Studio (SSMS)

Example:

A company models customer, order, and product data before building a sales database.
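
One possible way to express such a model is as a relational schema. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the table and column names are assumptions chosen for illustration. The foreign keys encode the relationships, so the database itself rejects an order that points at a customer who does not exist.

```python
import sqlite3

SCHEMA = """
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE products (
    product_id INTEGER PRIMARY KEY,
    title      TEXT NOT NULL,
    price      REAL NOT NULL CHECK (price >= 0)
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    product_id  INTEGER NOT NULL REFERENCES products(product_id),
    quantity    INTEGER NOT NULL CHECK (quantity > 0)
);
"""

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript(SCHEMA)

conn.execute("INSERT INTO customers VALUES (1, 'Ada')")
conn.execute("INSERT INTO products VALUES (10, 'Keyboard', 49.0)")
conn.execute("INSERT INTO orders VALUES (100, 1, 10, 2)")

def try_orphan_order(connection):
    """Attempt to insert an order for a customer that does not exist."""
    try:
        connection.execute("INSERT INTO orders VALUES (101, 999, 10, 1)")
    except sqlite3.IntegrityError:
        return "rejected"
    return "accepted"

orphan_result = try_orphan_order(conn)
print(orphan_result)
```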

4. Data Contract

Definition:

An agreement between teams about the structure, format, and expectations for data exchanged between systems.

Why It Matters:

Prevents breaking downstream processes when data changes.

Common Tools:

- JSON Schema

- OpenAPI

- Apache Avro

- Protobuf

Example:

A development team agrees that every user record must include name, email, and signup date.
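
In practice a contract like this is usually written in a schema language such as JSON Schema or Avro. As a minimal stand-in, the sketch below checks the same three fields in plain Python; the field names, types, and the `contract_violations` helper are assumptions for illustration.

```python
from datetime import date

# The contract: field name -> required Python type (hypothetical field names).
USER_CONTRACT = {"name": str, "email": str, "signup_date": date}

def contract_violations(record, contract=USER_CONTRACT):
    """Return a list of problems; an empty list means the record conforms."""
    problems = []
    for field, expected_type in contract.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            problems.append(f"wrong type for {field}")
    return problems

good = {"name": "Ada", "email": "ada@example.com", "signup_date": date(2024, 1, 15)}
bad = {"name": "Bob", "signup_date": "2024-01-15"}  # no email, date sent as text

print(contract_violations(good))
print(contract_violations(bad))
```

Running a check like this at the boundary between systems lets the producing team catch a breaking change before downstream consumers do.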

5. Data Visualization

Definition:

Representing data through charts, graphs, or dashboards for easier understanding.

Why It Matters:

Helps non-technical users quickly spot trends and anomalies.

Common Tools:

- Power BI

- Tableau

- Looker

- Looker Studio (formerly Google Data Studio)

Example:

An executive views a sales dashboard showing regional performance with interactive charts.

6. Data Lakehouse

Definition:

A hybrid platform combining the flexibility of data lakes with the structure of data warehouses.

Why It Matters:

Allows running analytics and machine learning from a single platform.

Common Tools:

- Databricks

- Snowflake

- Delta Lake

- Apache Iceberg

Example:

A company stores both raw IoT sensor data and structured sales data in a lakehouse.

7. Delta Lake

Definition:

An open-source storage layer on top of data lakes that adds reliability (ACID transactions), data versioning, and schema enforcement.

Why It Matters:

Improves trust and consistency in big data systems.

Common Tools:

- Databricks

- Apache Spark with Delta Lake

Example:

A data team tracks changes to customer records in a Delta Lake over time (time travel).
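
To make the idea of time travel concrete, here is a toy sketch in plain Python. It is not the Delta Lake API and not how Delta Lake stores data (Delta Lake keeps a transaction log over data files rather than full copies); the `VersionedTable` class and its methods are hypothetical and exist only to show the concept of reading an earlier version of a table.

```python
import copy

class VersionedTable:
    """Toy table that keeps every committed snapshot so old versions stay readable."""

    def __init__(self):
        self._versions = [{}]  # version 0 is the empty table

    def commit(self, changes):
        """Apply row changes on top of the latest snapshot; return the new version number."""
        snapshot = copy.deepcopy(self._versions[-1])
        snapshot.update(changes)
        self._versions.append(snapshot)
        return len(self._versions) - 1

    def read(self, version=None):
        """Read the latest snapshot, or an earlier one when a version is given."""
        index = len(self._versions) - 1 if version is None else version
        return copy.deepcopy(self._versions[index])

customers = VersionedTable()
v1 = customers.commit({1: {"email": "ada@old.example"}})
v2 = customers.commit({1: {"email": "ada@new.example"}})

print(customers.read()[1]["email"])    # current value
print(customers.read(v1)[1]["email"])  # value as of version 1
```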

8. Data Lake

Definition:

A centralized repository that stores all types of raw data (structured, unstructured, semi-structured).

Why It Matters:

Stores massive data volumes without upfront modeling—ideal for ML and big data.

Common Tools:

- Amazon S3

- Azure Data Lake Storage

- Hadoop HDFS

- Google Cloud Storage

Example:

An AI team stores raw customer reviews and logs for later sentiment analysis.

9. Data Mart

Definition:

A smaller, department-specific version of a data warehouse for quick access to focused datasets.

Why It Matters:

Improves speed and relevance of analytics for individual teams.

Common Tools:

- Snowflake

- Redshift

- SQL Server

- BigQuery

Example:

The HR team uses a data mart containing only employee records and payroll data.

10. Data Warehouse

Definition:

A centralized system for storing structured, cleaned data used for reporting and analysis.

Why It Matters:

Acts as a “single source of truth” for business intelligence.

Common Tools:

- Snowflake

- BigQuery

- Redshift

- Azure Synapse

Example:

Executives view company-wide KPIs pulled from the enterprise data warehouse.

11. Data Pipeline

Definition:

A system that automates the movement of data from source to destination, often with transformation steps.

Why It Matters:

Keeps data flowing continuously and reliably to where it’s needed.

Common Tools:

- Apache Airflow

- Azure Data Factory

- Talend

- dbt

- Fivetran

Example:

A pipeline loads web traffic data into a dashboard every hour for near-real-time analysis.
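
The source-to-destination flow described above can be sketched as three small steps: extract, transform, load. Everything here is illustrative: the CSV content, the function names, and the in-memory `dashboard_table` are assumptions, and in a real setup a scheduler such as Apache Airflow would trigger the run every hour.

```python
import csv
import io

# Hypothetical raw export from a web analytics source.
RAW_CSV = """page,visits
/home,120
/pricing,not_a_number
/docs,75
"""

def extract(text):
    """Read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Convert visit counts to integers and drop rows that cannot be parsed."""
    clean = []
    for row in rows:
        try:
            clean.append({"page": row["page"], "visits": int(row["visits"])})
        except ValueError:
            continue  # skip rows whose visit count is not an integer
    return clean

def load(rows, destination):
    """Append rows to the destination and report how many were written."""
    destination.extend(rows)
    return len(rows)

dashboard_table = []
loaded = load(transform(extract(RAW_CSV)), dashboard_table)
print(loaded, dashboard_table)
```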

12. Data Integration

Definition:

Combining data from multiple sources into a single, unified view.

Why It Matters:

Enables holistic analysis by eliminating data silos.

Common Tools:

- Informatica

- Talend

- Microsoft SSIS

- Stitch

- Fivetran

Example:

Merging CRM data, billing records, and website traffic for a complete customer profile.
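
A minimal sketch of that merge, assuming all three sources share a customer ID as the key. The source dictionaries and the `build_profiles` helper are hypothetical; real integration tools also handle mismatched keys, conflicting values, and differing formats.

```python
# Hypothetical extracts from three systems, each keyed by customer ID.
crm = {1: {"name": "Ada"}, 2: {"name": "Bob"}}
billing = {1: {"total_billed": 250.0}}
web = {1: {"page_views": 14}, 2: {"page_views": 3}}

def build_profiles(*sources):
    """Combine per-customer fields from every source into one record per customer."""
    profiles = {}
    for source in sources:
        for customer_id, fields in source.items():
            profiles.setdefault(customer_id, {}).update(fields)
    return profiles

profiles = build_profiles(crm, billing, web)
print(profiles)
```

Customer 2 has no billing record, so the unified profile simply lacks that field; deciding how to treat such gaps is part of the integration work.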

13. Data Cleaning

Definition:

Correcting or removing inaccurate, incomplete, or duplicate data.

Why It Matters:

Bad data leads to bad decisions—clean data is essential for accuracy.

Common Tools:

- OpenRefine

- Trifacta

- Python (Pandas)

- Excel

Example:

Removing duplicate customer records or fixing misspelled product names.
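
Both cleaning steps in the example can be sketched with the standard library. The product list, the 0.8 similarity cutoff, and the choice of email as the duplicate key are assumptions for illustration; real datasets usually need more careful matching rules.

```python
from difflib import get_close_matches

# Hypothetical list of correctly spelled product names.
KNOWN_PRODUCTS = ["keyboard", "monitor", "headphones"]

def fix_product_name(name, known=KNOWN_PRODUCTS):
    """Replace a name with its closest known spelling, or keep it if nothing is close."""
    matches = get_close_matches(name.strip().lower(), known, n=1, cutoff=0.8)
    return matches[0] if matches else name

def dedupe_customers(customers):
    """Keep the first record for each email, ignoring case and surrounding spaces."""
    seen = set()
    unique = []
    for customer in customers:
        key = customer["email"].strip().lower()
        if key not in seen:
            seen.add(key)
            unique.append(customer)
    return unique

customers = [
    {"email": "ada@example.com"},
    {"email": "ADA@example.com "},
    {"email": "bob@example.com"},
]
print(fix_product_name("keybord"))
print(len(dedupe_customers(customers)))
```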

14. Data Governance

Definition:

A framework of policies, roles, and responsibilities that ensure proper data management.

Why It Matters:

Ensures data privacy, compliance, quality, and accountability.

Common Tools:

- Collibra

- Alation

- Informatica Axon

- Microsoft Purview

Example:

Ensuring GDPR compliance by labeling and restricting access to personal data.
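
The "label and restrict" idea can be sketched as a policy table plus a filter. The column labels, role names, and `visible_fields` helper below are hypothetical, and real governance platforms manage this centrally rather than in application code. Note the deny-by-default choice: a column without a label is hidden from everyone.

```python
# Hypothetical classification of columns; "pii" marks personal data.
COLUMN_LABELS = {"customer_id": "internal", "email": "pii", "country": "internal"}

# Hypothetical policy: which labels each role may read.
ROLE_ACCESS = {"analyst": {"internal"}, "privacy_officer": {"internal", "pii"}}

def visible_fields(record, role):
    """Return only the fields the role may see; unlabeled columns are hidden."""
    allowed = ROLE_ACCESS.get(role, set())
    return {k: v for k, v in record.items() if COLUMN_LABELS.get(k) in allowed}

record = {"customer_id": 7, "email": "ada@example.com", "country": "DE", "notes": "x"}
print(visible_fields(record, "analyst"))
print(visible_fields(record, "privacy_officer"))
```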

15. Data Security

Definition:

Protecting data from unauthorized access, misuse, or breaches.

Why It Matters:

Critical for protecting sensitive data and maintaining customer trust.

Common Tools:

- AWS IAM

- Microsoft Defender

- Firewalls & Encryption Tools

- Symantec DLP

Example:

Encrypting customer credit card information and limiting access to finance teams only.

16. Data Observability

Definition:

Monitoring the health, accuracy, and reliability of data systems and pipelines.

Why It Matters:

Helps detect and resolve data issues before they impact users.

Common Tools:

- Monte Carlo

- Databand

- Bigeye

- Acceldata

Example:

An alert is triggered when a data pipeline fails to update a dashboard.
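
One of the simplest observability checks is freshness: has each table been refreshed recently enough? The sketch below assumes a one-hour limit and uses made-up table names and timestamps; dedicated tools also watch volume, schema changes, and value distributions.

```python
from datetime import datetime, timedelta, timezone

def stale_tables(last_updated, now, max_age=timedelta(hours=1)):
    """Return names of tables whose last refresh is older than max_age."""
    return sorted(name for name, ts in last_updated.items() if now - ts > max_age)

# Hypothetical refresh times, relative to a fixed "now" so the result is repeatable.
now = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
last_updated = {
    "web_traffic_dashboard": now - timedelta(hours=3),
    "sales_dashboard": now - timedelta(minutes=20),
}

alerts = [f"ALERT: {name} has not refreshed in time" for name in stale_tables(last_updated, now)]
print(alerts)
```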

17. Data Catalog

Definition:

An organized inventory of data assets including metadata, definitions, and access info.

Why It Matters:

Makes data easier to find, understand, and trust across the organization.

Common Tools:

- Alation

- Microsoft Purview

- Apache Atlas

- Collibra

Example:

An analyst finds and understands a customer churn dataset using the data catalog.
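
At its core, a catalog is searchable metadata. The toy sketch below shows the analyst's lookup with a hypothetical two-entry catalog and a hypothetical `search_catalog` helper; products such as Alation or Apache Atlas add ownership, lineage, and access details on top of this basic idea.

```python
# Hypothetical catalog entries describing datasets, not the data itself.
CATALOG = [
    {"name": "customer_churn", "description": "Monthly churn flag per customer", "owner": "analytics"},
    {"name": "web_sessions", "description": "Raw clickstream sessions", "owner": "platform"},
]

def search_catalog(keyword, catalog=CATALOG):
    """Return names of datasets whose name or description mentions the keyword."""
    needle = keyword.lower()
    return [
        entry["name"]
        for entry in catalog
        if needle in entry["name"].lower() or needle in entry["description"].lower()
    ]

print(search_catalog("churn"))
```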

18. Data Lineage

Definition:

A record of where data comes from, how it is transformed, and where it is used.

Why It Matters:

Essential for troubleshooting, auditing, and compliance.

Common Tools:

- Apache Atlas

- dbt

- Talend

- Microsoft Purview

Example:

A data engineer traces an incorrect report back to its data source for correction.
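
Lineage is naturally a graph, and tracing a report back to its sources is a graph traversal. The sketch below assumes a small hand-written lineage map; the asset names and the `upstream_sources` helper are hypothetical, and lineage tools normally build this graph automatically from pipeline metadata.

```python
# Hypothetical lineage graph: each asset maps to the assets it is built from.
LINEAGE = {
    "revenue_report": ["orders_clean"],
    "orders_clean": ["orders_raw", "currency_rates"],
    "orders_raw": [],
    "currency_rates": [],
}

def upstream_sources(asset, lineage=LINEAGE):
    """Return every asset that feeds `asset`, directly or indirectly."""
    found = []
    stack = list(lineage.get(asset, []))
    while stack:
        current = stack.pop()
        if current not in found:
            found.append(current)
            stack.extend(lineage.get(current, []))
    return found

print(sorted(upstream_sources("revenue_report")))
```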

19. Data Scalability

Definition:

The ability of data systems to handle increasing data volumes without performance loss.

Why It Matters:

Ensures systems grow with your business needs.

Common Tools:

- Snowflake

- Google BigQuery

- Amazon Redshift Spectrum

- Apache Kafka

Example:

An e-commerce platform adds servers as sales traffic increases during holidays.

20. Data Transformation

Definition:

Converting data into a different format, structure, or value to make it useful for analysis.

Why It Matters:

Prepares raw data for storage, analysis, or reporting.

Common Tools:

- dbt

- Apache Spark

- Talend

- SQL

Example:

Changing date formats from “YYYY/MM/DD” to “DD-MM-YYYY” in a reporting system.
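
The date conversion in the example takes only a few lines with Python's `datetime` module. The `reformat_date` name is an assumption; the format codes `%Y/%m/%d` and `%d-%m-%Y` correspond to the two patterns mentioned above.

```python
from datetime import datetime

def reformat_date(value):
    """Convert a 'YYYY/MM/DD' string to 'DD-MM-YYYY'."""
    return datetime.strptime(value, "%Y/%m/%d").strftime("%d-%m-%Y")

print(reformat_date("2024/03/07"))
```

Parsing into a real date before formatting, rather than rearranging substrings, means an invalid input such as `2024/13/40` raises an error instead of passing through silently.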

Conclusion

These 20 terms represent the backbone of data work across industries. Whether you’re building pipelines, designing dashboards, or protecting data, understanding these concepts will help you work smarter and make better decisions.
