Transformers in Neural Networks – Introduction

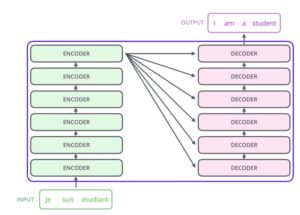

Recurrent Neural Networks – Background

Recurrent Neural Networks (RNNs) have been widely used for sequence modeling problems in natural language processing (NLP) because they process sequential data one element at a time. In particular, the Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) variants of RNNs have been shown to be […]
